Interactive & Explainable Machine Learning

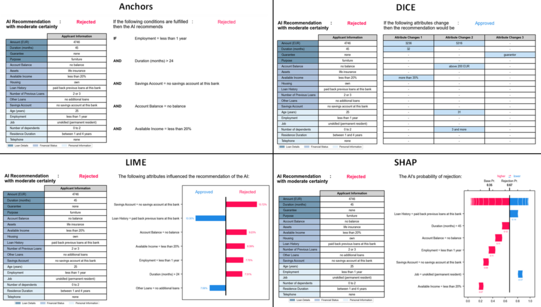

Designing the Representation of Local Explanations of Model-Agnostic XAI Methods to Improve the Transparency of Underlying AI Systems

In Explainable Artificial Intelligence (XAI) research, various local model-agnostic methods have been proposed to explain individual predictions to users. However, little attention has been paid to the user perspective, so it remains poorly understood how users evaluate local model-agnostic explanations. To address this research gap, we revised the representation of local explanations for four established model-agnostic XAI methods through an iterative design process. We then evaluated the revised representations with users through eye tracking, surveys, and interviews. With this project, we contribute to ongoing research on improving the transparency of underlying AI systems.

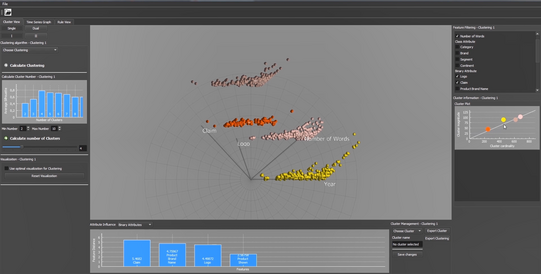

Development of an Interactive Machine Learning System for the Analysis of Print Advertisements

Advertising is ubiquitous and has a major impact on consumer behavior. Given that advertising aims to be memorable and to attract attention, it is striking that previous research in business and management has largely neglected the content and style of actual print ads and their evolution over time. With this in mind, we collected more than one million print advertisements published in the English-language news magazine The Economist between 1843 and 2014. However, interactive intelligent systems that can process such a large amount of image data while allowing users to add metadata, explore images, derive theses, and apply machine learning techniques previously unavailable to them are lacking. Inspired by the research field of interactive machine learning, we propose a system that enables marketers to process and analyze this large collection of print ads.